OpenAI Models

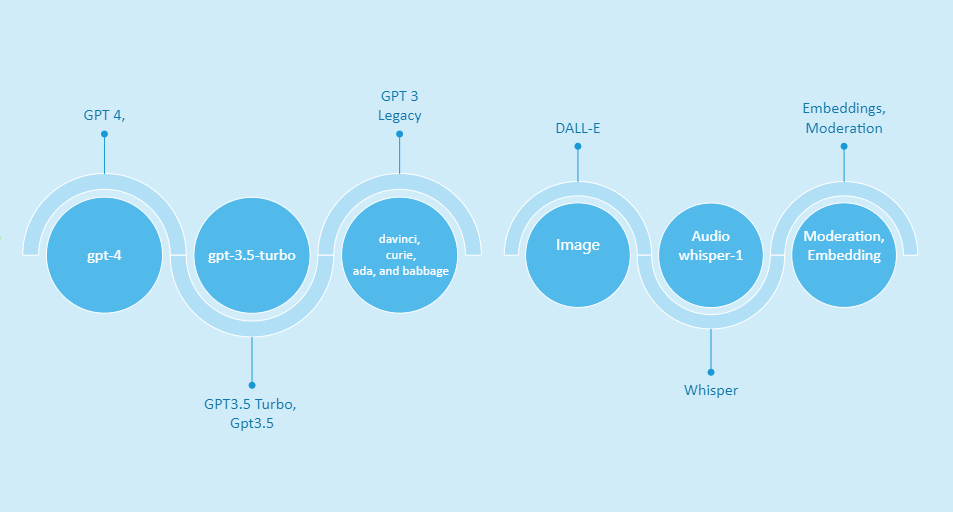

OpenAI provides a diverse set of models with different capabilities and price points. You can also make customizations to the models for your specific use case with fine-tuning. Here is a list of some of the models available:

- GPT-4: A set of models that improve on GPT-3.5 and can understand as well as generate natural language or code.

- GPT-3.5: A set of models that improve on GPT-3 and can understand as well as generate natural language or code.

- GPT base: A set of models without instruction following that can understand as well as generate natural language or code.

- DALL·E: A model that can generate and edit images given a natural language prompt.

- Whisper: A model that can convert audio into text.

- Embeddings: A set of models that can convert text into a numerical form.

- Moderation: A fine-tuned model that can detect whether text may be sensitive or unsafe.

- GPT-3 Legacy: A set of models that can understand and generate natural language.

- Deprecated: A full list of models that have been deprecated.

OpenAI has also published open-source models including Point-E, Whisper, Jukebox, and CLIP. Visit the OpenAI model index for researchers to learn more about which models have been featured in the research papers and the differences between model series like InstructGPT and GPT-3.5.

Continuous Model Upgrades

OpenAI is extending support for gpt-3.5-turbo-0301 and gpt-4-0314 models in the OpenAI API until at least June 13, 2024. Some models are now being continually updated, and developers can contribute evals to help improve the model for different use cases. Temporary snapshots of models will be announced with deprecation dates once updated versions are available.

GPT-4

GPT-4 is a large multimodal model that can solve difficult problems with greater accuracy than previous models, thanks to its broader general knowledge and advanced reasoning capabilities. It is optimized for chat but works well for traditional completions tasks using the Chat completions API.

GPT-3.5

GPT-3.5 models can understand and generate natural language or code. The most capable and cost-effective model in the GPT-3.5 family is gpt-3.5-turbo, which is optimized for chat using the Chat completions API but also works well for traditional completions tasks.
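As a rough illustration of how a traditional completion task can be phrased for the Chat completions API, the sketch below builds a request body without sending it. The helper name `build_chat_request` is hypothetical, and the `model`/`messages` field layout with `role` and `content` keys is stated here as an assumption about the request format rather than taken from the text above.

```python
import json


def build_chat_request(model: str, user_text: str, system_text: str = "") -> dict:
    """Build a JSON body intended for a POST to /v1/chat/completions.

    Hypothetical helper: it only assembles the payload and makes no network call.
    """
    messages = []
    if system_text:
        # An optional system message sets the overall behaviour.
        messages.append({"role": "system", "content": system_text})
    # The task itself goes in a single user message.
    messages.append({"role": "user", "content": user_text})
    return {"model": model, "messages": messages}


# A completion-style task expressed as one user message.
body = build_chat_request("gpt-3.5-turbo", "Translate to French: Good morning.")
print(json.dumps(body, indent=2))
```

The same helper could be pointed at a GPT-4 model by changing only the `model` argument, since both families share the chat endpoint.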

GPT base

GPT base models can understand and generate natural language or code but are not trained with instruction following. These models are replacements for the original GPT-3 base models and use the legacy Completions API. Most customers should use GPT-3.5 or GPT-4.

DALL·E

DALL·E is an AI system that can create realistic images and art from a description in natural language. It can create a new image with a certain size, edit an existing image, or create variations of a user-provided image.

Whisper

Whisper is a general-purpose speech recognition model. It can perform multilingual speech recognition as well as speech translation and language identification.

Embeddings

Embeddings are numerical representations of text that can be used to measure the relatedness between two pieces of text. They are useful for search, clustering, recommendations, anomaly detection, and classification tasks.
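One common way to measure relatedness between two embeddings is cosine similarity. The sketch below assumes that metric and uses tiny made-up vectors in place of real model output, which would have far more dimensions.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Toy vectors (illustrative only, not produced by any model).
query = [0.1, 0.3, 0.5]
doc_close = [0.1, 0.29, 0.52]
doc_far = [0.9, -0.2, 0.0]

# Rank documents by similarity to the query, most related first.
ranked = sorted(
    [("doc_close", doc_close), ("doc_far", doc_far)],
    key=lambda item: cosine_similarity(query, item[1]),
    reverse=True,
)
print([name for name, _ in ranked])
```

Search, clustering, and recommendation pipelines typically build on this kind of pairwise score.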

Moderation

The Moderation models are designed to check whether content complies with OpenAI's usage policies. They provide classification capabilities that look for content in the following categories: hate, hate/threatening, self-harm, sexual, sexual/minors, violence, and violence/graphic.
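The categories above can be handled in client code roughly as follows. This sketch assumes a moderation result has already been reduced to a dictionary mapping each category name to a boolean. That shape is an assumption for illustration, and `flagged_categories` is a hypothetical helper.

```python
# Category names as listed in the text above.
MODERATION_CATEGORIES = (
    "hate",
    "hate/threatening",
    "self-harm",
    "sexual",
    "sexual/minors",
    "violence",
    "violence/graphic",
)


def flagged_categories(categories: dict) -> list:
    """Return the known categories marked True, in the canonical order."""
    return [name for name in MODERATION_CATEGORIES if categories.get(name, False)]


# Hand-written sample result (not real API output).
sample = {name: False for name in MODERATION_CATEGORIES}
sample["violence"] = True

print(flagged_categories(sample))
```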

OpenAI Fine-tuning and Legacy Models

Fine-tuning Models

Fine-tuning is the process of taking a pre-trained model and training it further on a smaller dataset that is specific to a particular task. This helps the model to specialize and improve its performance on that task. OpenAI provides the ability to fine-tune certain models. The models available for fine-tuning are the original GPT-3 base models: davinci, curie, babbage, and ada.
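To make the idea of a smaller task-specific dataset concrete, the sketch below writes a few labeled examples as JSON Lines. The prompt/completion record layout, the example reviews, and the helper name `to_jsonl` are all assumptions for illustration. The text above does not specify a training file format.

```python
import json

# Hypothetical sentiment examples: each record pairs an input with the desired output.
examples = [
    {"prompt": "Review: Great battery life ->", "completion": " positive"},
    {"prompt": "Review: Stopped working after a week ->", "completion": " negative"},
]


def to_jsonl(records) -> str:
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(record) for record in records) + "\n"


training_file_text = to_jsonl(examples)
print(training_file_text, end="")
```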

GPT-3 Legacy Models

The GPT-3 legacy models are the original GPT-3 models that have been superseded by the more powerful GPT-3.5 generation models. However, they are still available for use and fine-tuning. The GPT-3 legacy models include:

- text-curie-001: Very capable, faster and lower cost than Davinci. Max tokens: 2,049. Training data up to Oct 2019.

- text-babbage-001: Capable of straightforward tasks, very fast, and lower cost. Max tokens: 2,049. Training data up to Oct 2019.

- text-ada-001: Capable of very simple tasks, usually the fastest model in the GPT-3 series, and lowest cost. Max tokens: 2,049. Training data up to Oct 2019.

- davinci: Most capable GPT-3 model. Can do any task the other models can do, often with higher quality. Max tokens: 2,049. Training data up to Oct 2019.

- curie: Very capable, but faster and lower cost than Davinci. Max tokens: 2,049. Training data up to Oct 2019.

- babbage: Capable of straightforward tasks, very fast, and lower cost. Max tokens: 2,049. Training data up to Oct 2019.

- ada: Capable of very simple tasks, usually the fastest model in the GPT-3 series, and lowest cost. Max tokens: 2,049. Training data up to Oct 2019.
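The 2,049-token figure listed above can be turned into a simple pre-flight check. The sketch assumes the limit covers the prompt and the generated completion together, and that token counts come from a tokenizer not shown here. `fits_context` is a hypothetical helper.

```python
# Max tokens listed for the GPT-3 legacy models above.
LEGACY_MAX_TOKENS = 2049


def fits_context(prompt_tokens: int, completion_tokens: int,
                 limit: int = LEGACY_MAX_TOKENS) -> bool:
    """Return True if the prompt plus the requested completion fits within the limit."""
    if prompt_tokens < 0 or completion_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return prompt_tokens + completion_tokens <= limit


print(fits_context(2000, 49))
print(fits_context(2000, 50))
```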

Model Endpoint Compatibility

Different models are compatible with different endpoints. For example:

- /v1/chat/completions: gpt-4, gpt-4-0613, gpt-4-32k, gpt-4-32k-0613, gpt-3.5-turbo, gpt-3.5-turbo-0613, gpt-3.5-turbo-16k, and gpt-3.5-turbo-16k-0613.

- /v1/completions (Legacy): text-davinci-003, text-davinci-002, text-davinci-001, text-curie-001, text-babbage-001, text-ada-001, davinci, curie, babbage, and ada.
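The compatibility listing above can be encoded as a small lookup so that client code picks the right endpoint for a model. The function name `endpoint_for` is hypothetical, and the sets contain only the models named in this section.

```python
# Models listed above as compatible with the chat endpoint.
CHAT_MODELS = {
    "gpt-4", "gpt-4-0613", "gpt-4-32k", "gpt-4-32k-0613",
    "gpt-3.5-turbo", "gpt-3.5-turbo-0613",
    "gpt-3.5-turbo-16k", "gpt-3.5-turbo-16k-0613",
}

# Models listed above as compatible with the legacy completions endpoint.
LEGACY_COMPLETION_MODELS = {
    "text-davinci-003", "text-davinci-002", "text-davinci-001",
    "text-curie-001", "text-babbage-001", "text-ada-001",
    "davinci", "curie", "babbage", "ada",
}


def endpoint_for(model: str) -> str:
    """Return the endpoint path for a model named in this section."""
    if model in CHAT_MODELS:
        return "/v1/chat/completions"
    if model in LEGACY_COMPLETION_MODELS:
        return "/v1/completions"
    raise ValueError(f"no endpoint listed for model: {model}")


print(endpoint_for("gpt-4"))
print(endpoint_for("davinci"))
```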

For more details, visit the OpenAI Platform.

Data Usage

As of March 1, 2023, data sent to the OpenAI API will not be used to train or improve OpenAI models unless you explicitly opt in. API data may be retained for up to 30 days, after which it will be deleted (unless otherwise required by law). For trusted customers with sensitive applications, zero data retention may be available.

How OpenAI Uses Your Data

Your data is your data. The retention window of up to 30 days exists to help identify abuse. This data policy does not apply to OpenAI's non-API consumer services like ChatGPT or DALL·E Labs.

For more details, see OpenAI's data usage policies.

Default Usage Policies by Endpoint

Different endpoints have different data usage and retention policies. For example, the /v1/completions, /v1/chat/completions, and /v1/edits endpoints do not use data for training, retain data for 30 days by default, and are eligible for zero retention. The /v1/audio/transcriptions, /v1/audio/translations, and /v1/moderations endpoints have zero data retention by default.
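The per-endpoint defaults above can be captured in a small table, for instance to estimate the latest date by which a request's data would be deleted. This is a sketch only: it ignores legal holds and negotiated zero-retention agreements, and `latest_deletion_date` is a hypothetical helper.

```python
from datetime import date, timedelta

# Default retention in days, as described in the text above.
DEFAULT_RETENTION_DAYS = {
    "/v1/completions": 30,
    "/v1/chat/completions": 30,
    "/v1/edits": 30,
    "/v1/audio/transcriptions": 0,
    "/v1/audio/translations": 0,
    "/v1/moderations": 0,
}

# Endpoints described above as eligible for zero retention on request.
ZERO_RETENTION_ELIGIBLE = {"/v1/completions", "/v1/chat/completions", "/v1/edits"}


def latest_deletion_date(endpoint: str, sent_on: date) -> date:
    """Return the last day data may still be retained under the default policy."""
    return sent_on + timedelta(days=DEFAULT_RETENTION_DAYS[endpoint])


print(latest_deletion_date("/v1/chat/completions", date(2023, 3, 1)))
print(latest_deletion_date("/v1/moderations", date(2023, 3, 1)))
```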

For more details, see OpenAI's API data usage policies. To learn more about zero retention, get in touch with the OpenAI sales team.